An AAPM Grand Challenge

Overview

The American Association of Physicists in Medicine (AAPM) is sponsoring a “Grand Challenge” on deep-learning for image reconstruction leading up to the 2021 AAPM Annual Meeting. The DL-sparse-view CT challenge will provide an opportunity for investigators in CT image reconstruction using data-driven techniques to compete with their colleagues on the accuracy of their methodology for solving the inverse problem associated with sparse-view CT acquisition. A session at the 2021 AAPM Annual Meeting will focus on the DL-sparse-view CT Challenge; an individual from each of the two top-performing teams will receive a waiver of the meeting registration fee in order to present their methods during this session. Following the Annual Meeting, challenge participants from the five top-performing teams will be invited to participate in a challenge report.

Background

Background information on the DL-sparse-view CT challenge can be found in the article “Do CNNs solve the CT inverse problem?” [1], which spells out the evidence needed to support the claim that data-driven techniques, such as deep learning with CNNs, solve the CT inverse problem. Recent literature [2,3,4] claims that CNNs can solve inverse problems related to medical image reconstruction. In particular, Refs. [2,4] claim that CNNs solve a specific inverse problem that arises in sparse-view X-ray CT. These papers and related work have gained widespread attention, and hundreds of follow-up papers build on this approach. Evidence for solving the CT inverse problem can take the form of numerical simulations in which a simulated test image can be recovered from its ideal projection data (i.e., data with no noise or other inconsistencies). In Ref. [1], such experiments were attempted using our best guess at implementing the methodology in Refs. [2,4]. While the CNN results achieved a certain level of accuracy, they fall short of providing evidence for solving the associated inverse problem.

We do, however, acknowledge that there has been much development in this field over the past few years and it stands to reason that there are likely data-driven approaches superior to the one that we implemented. The purpose of this challenge is to identify the state-of-the-art in solving the CT inverse problem with data-driven techniques. The challenge seeks the data-driven methodology that provides the most accurate reconstruction of sparse-view CT data.

[1] E. Y. Sidky, I. Lorente, J. G. Brankov, and X. Pan, “Do CNNs solve the CT inverse problem?,” IEEE Trans. Biomed. Eng., 2020 (early access: https://doi.org/10.1109/TBME.2020.3020741).

Also available at: https://arxiv.org/abs/2005.10755

[2] K. H. Jin, M. T. McCann, E. Froustey, and M. Unser, “Deep convolutional neural network for inverse problems in imaging,” IEEE Trans. Image Proc., vol. 26, pp. 4509–4522, 2017.

[3] B. Zhu, J. Z. Liu, S. F. Cauley, B. R. Rosen, and M. S. Rosen, “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, pp. 487–492, 2018.

[4] Y. Han and J. C. Ye, “Framing U-Net via deep convolutional framelets: Application to sparse-view CT,” IEEE Trans. Med. Imag., vol. 37, pp. 1418–1429, 2018.

Objective

The overall objective of the DL-sparse-view CT challenge is to determine which deep-learning (or data-driven) technique provides the most accurate recovery of a test phantom from ideal, noiseless 128-view projection data. To this end, we will provide 4,000 data/image pairs, based on a 2D breast CT simulation, for training the algorithm. How these 4,000 pairs are split into training and validation sets is up to the individual participating teams. After the training period is over, testing data for 100 cases will be provided without the corresponding ground-truth images. Participants will submit their reconstructed images for these testing cases.
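Because the split of the 4,000 pairs is left to each team, one simple option is a seeded random split. The sketch below assumes the pairs are indexed 0 to 3,999 and uses a 10% validation fraction; the function name, the fraction, and the seed are hypothetical choices for illustration, not challenge requirements.

```python
import numpy as np

def split_train_val(num_pairs=4000, val_fraction=0.1, seed=0):
    """Return (train_idx, val_idx) as disjoint index arrays.

    val_fraction and seed are hypothetical choices; the challenge
    leaves the training/validation split entirely to each team.
    """
    rng = np.random.default_rng(seed)   # seeded so the split is reproducible
    perm = rng.permutation(num_pairs)   # shuffled indices 0..num_pairs-1
    n_val = int(round(num_pairs * val_fraction))
    return perm[n_val:], perm[:n_val]

train_idx, val_idx = split_train_val()
print(len(train_idx), len(val_idx))  # 3600 400
```

Fixing the seed keeps the validation subset identical across training runs, so validation scores from different model variants stay comparable.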

Get Started

- Register to get access via the Challenge website

- Download the data/image pairs after approval

- Train your image reconstruction algorithm

- Download the testing data

- Submit your results

Important Dates

- Feb 28, 2021: Grand challenge website launch

- Mar 17, 2021: Training set release

- Mar 31, 2021: Release of a single validation set for the leaderboard

- May 17, 2021: Testing set release

- May 31, 2021: Final submission of test results (midnight UTC, 5pm PDT)

- June 24, 2021: Top two teams invited to present at the challenge symposium

- July 25-29, 2021: Grand Challenge Symposium, AAPM 2021 Annual Meeting

- August 2021: Top five teams invited to participate in the challenge report and datasets made public

Challenge Data

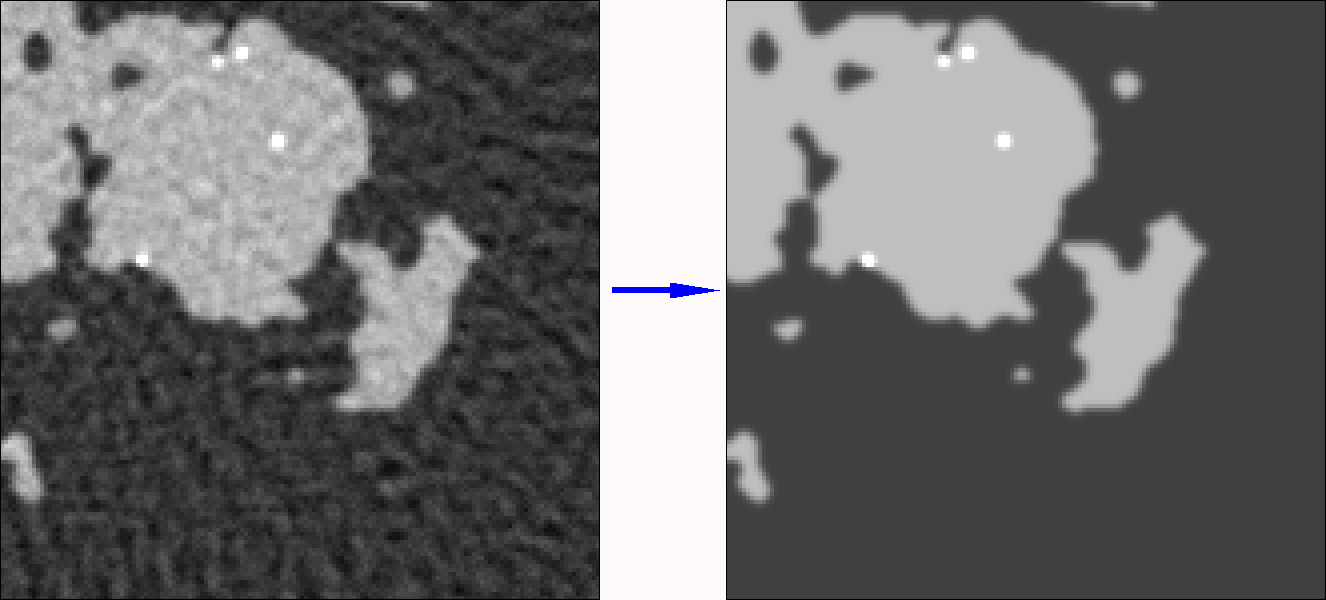

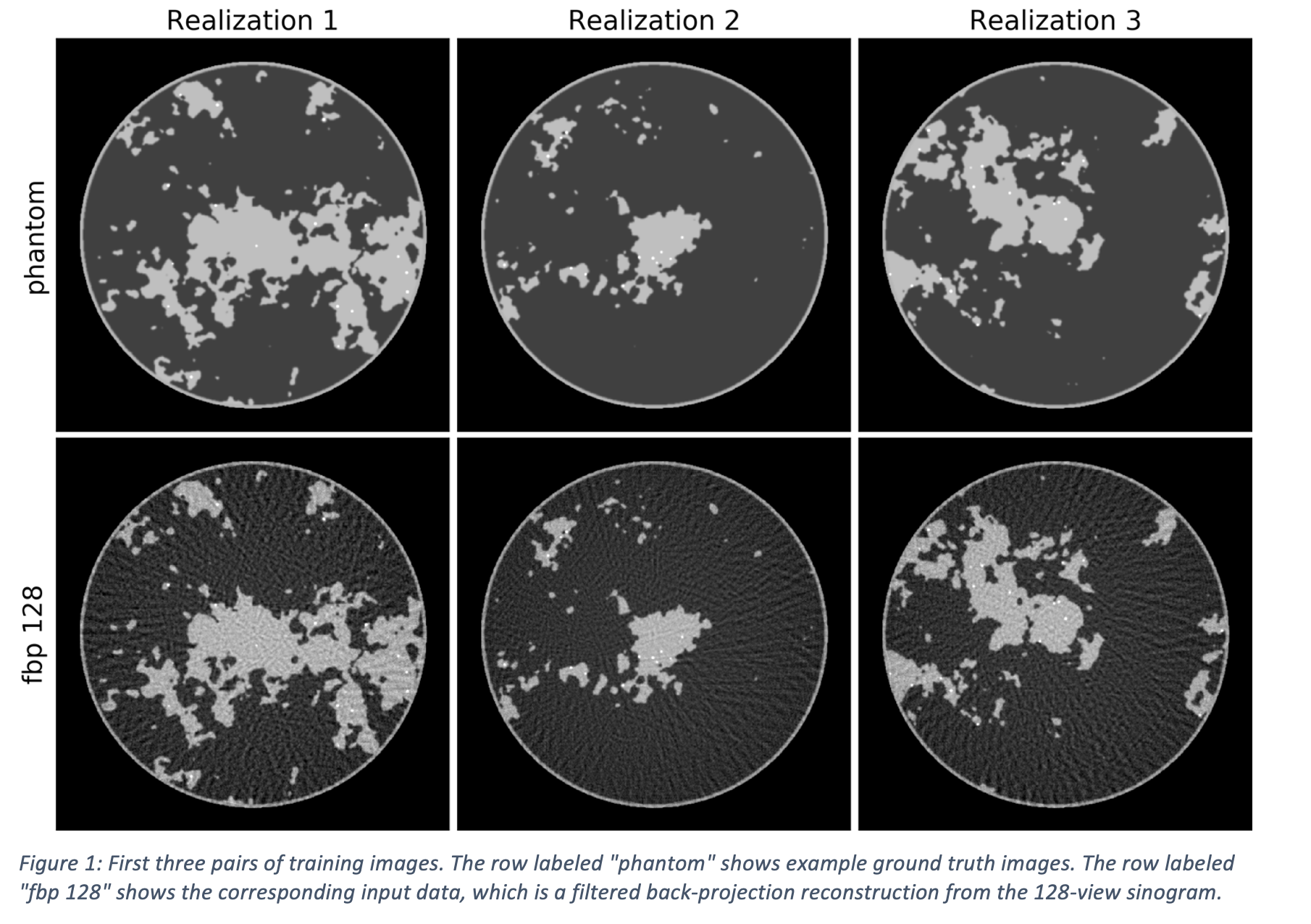

The details of how the simulation images and data are generated are provided in Ref. [1]. The 512×512-pixel training images come from a breast phantom simulation with complex random structure modeling fibroglandular tissue within the circular cross-section of a breast model (see Figure 1). The challenge simulation differs from that of Ref. [1] in two respects: a variable number of microcalcification-like objects of variable amplitude are inserted into the phantom, and the scan configuration is fan-beam instead of parallel-beam. We will generate 4,000 new training images. Each training image is accompanied by 128-view fan-beam projection data (a sinogram) acquired over a 360-degree scan, and by an image reconstructed with filtered back-projection (FBP) from the 128-view data.
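As a worked example of the angular sampling, 128 views spread evenly over 360 degrees give an angular step of 360/128 = 2.8125 degrees. The sketch below assumes equally spaced views with the first view at 0 degrees; the actual starting angle and rotation direction of the challenge geometry are not stated here and are assumptions.

```python
import numpy as np

NUM_VIEWS = 128         # views per sinogram, as stated for the challenge
SCAN_RANGE_DEG = 360.0  # full fan-beam rotation

# Assumption: views are equally spaced and the first view is at 0 degrees.
view_angles_deg = np.arange(NUM_VIEWS) * SCAN_RANGE_DEG / NUM_VIEWS
angular_step_deg = SCAN_RANGE_DEG / NUM_VIEWS

print(angular_step_deg)     # 2.8125
print(view_angles_deg[-1])  # 357.1875
```

The last view sits one step short of 360 degrees, because the 360-degree position would coincide with the first view.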

DL investigators can choose to take an end-to-end approach (128-view sinogram to image), an artifact-removal approach (128-view FBP image to image), or some combination of the two.
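On the artifact-removal side, one common design choice is residual learning: the network estimates a correction that is added back to the FBP input, rather than predicting the full image directly. The sketch below shows only that inference-time wiring; `residual_artifact_removal` and `correction_net` are hypothetical names, with `correction_net` standing in for whatever trained network a team uses, and nothing here reproduces a specific method from the cited references.

```python
import numpy as np

def residual_artifact_removal(fbp_image, correction_net):
    """Image-to-image inference with a residual (skip) connection.

    correction_net is a hypothetical callable standing in for a trained
    network: it maps an FBP image to an estimated correction of the same
    shape, which is then added back to the FBP input.
    """
    fbp = np.asarray(fbp_image, dtype=np.float32)
    correction = np.asarray(correction_net(fbp), dtype=np.float32)
    if correction.shape != fbp.shape:
        raise ValueError("correction must have the same shape as the FBP image")
    return fbp + correction

# Toy stand-in for a trained network, used only to exercise the wiring.
toy_net = lambda x: -0.5 * x
restored = residual_artifact_removal(np.ones((512, 512)), toy_net)
```

An end-to-end approach would instead feed the 128-view sinogram to the network, so the network itself must learn the mapping from projection data to the image domain.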

In the training phase, DL investigators will have access to the 4,000 training images along with their sinograms and FBP images. In the testing phase, 100 new data/image pairs will be generated, and participants will be given only the data, in the form of the 128-view sinograms and FBP images. Rankings of the DL algorithms will be based purely on RMSE over the 100 testing images. During the training phase, a single validation data set will be released for the purpose of creating a leaderboard, which will be visible only from March 17th to May 17th, the day the testing set is released. Final test results may not reflect the leaderboard ranking, because the final testing phase depends on RMSE performance over a test set containing 100 images. Teams can submit only one set of results, and each submission is expected to be accompanied by a technical note describing the DL algorithm. The top two performers will be expected to participate in preparing a publication that analyzes and summarizes the results and provides a description of the methodology.

Quantitative evaluation

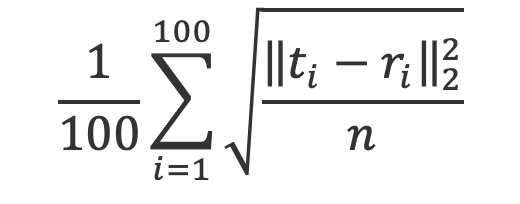

Submitted reconstructed images will be evaluated by computing

- Root-mean-square-error (RMSE) averaged over the 100 test images:

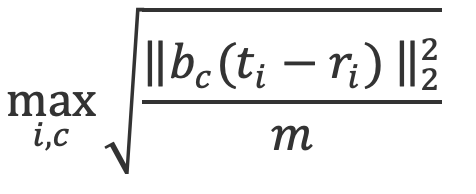

where r and t represent the reconstructed and test images, respectively, and n is the number of pixels in a single image. - Worst-case 25x25 pixel ROI-RMSE over all 100 test image

where bc is a masking indicator function for the 25x25 ROI centered on coordinates c in the image, m=625 is the number of pixels in the ROI.
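The two metrics can be sketched in NumPy as follows. This is an illustrative implementation under stated assumptions, not the organizers' scoring code: the mean RMSE is taken as the average of per-image RMSE values, and the worst-case ROI-RMSE considers only 25×25 windows lying fully inside the image (border handling is an assumption). Window sums are computed with an integral image (summed-area table), so every ROI position is scored without an explicit loop.

```python
import numpy as np

def mean_rmse(recons, truths):
    """Average of per-image RMSE; inputs have shape (num_images, H, W)."""
    r = np.asarray(recons, dtype=np.float64)
    t = np.asarray(truths, dtype=np.float64)
    per_image = np.sqrt(np.mean((r - t) ** 2, axis=(1, 2)))
    return per_image.mean()

def worst_roi_rmse(recons, truths, roi=25):
    """Largest ROI-RMSE over all images and all fully contained roi x roi windows."""
    r = np.asarray(recons, dtype=np.float64)
    t = np.asarray(truths, dtype=np.float64)
    sq = (r - t) ** 2
    num, h, w = sq.shape
    # Integral image with a zero first row/column: ii[:, a, b] = sum of sq[:, :a, :b].
    ii = np.zeros((num, h + 1, w + 1))
    ii[:, 1:, 1:] = sq.cumsum(axis=1).cumsum(axis=2)
    # Sum of squared errors inside every window whose top-left corner fits in the image.
    win = (ii[:, roi:, roi:] - ii[:, :-roi, roi:]
           - ii[:, roi:, :-roi] + ii[:, :-roi, :-roi])
    m = roi * roi  # 625 pixels for the 25x25 ROI
    # Clamp at zero: cumulative-sum round-off can leave tiny negative values.
    return np.sqrt(max(win.max(), 0.0) / m)

# Toy check: image 0 is perfect; image 1 has a 25x25 block with error 2.
recons = np.zeros((2, 50, 50))
truths = np.zeros((2, 50, 50))
truths[1, 10:35, 10:35] = 2.0
```

For the challenge itself, `recons` and `truths` would be stacks of shape (100, 512, 512). In the toy check, image 1 has RMSE sqrt(625·4/2500) = 1, so the mean RMSE over the two images is 0.5, and the worst ROI covers the error block exactly, giving 2.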

Mean RMSE will be the primary metric that determines the algorithm ranking. In case of a numerical tie (to be determined based on the distribution of mean-RMSE results), the worst-case ROI-RMSE will be used to break the tie.
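Since the tie threshold is left to the organizers, the following is only one possible way to encode the ranking rule. It assumes a hypothetical tie width `tie_tol` and treats mean-RMSE values that fall in the same bin of that width as tied, ordering tied teams by their worst-case ROI-RMSE. The team names and scores are made up for illustration.

```python
import math

def rank_teams(results, tie_tol):
    """Order team names best-first.

    results maps team name -> (mean_rmse, worst_roi_rmse).
    Hypothetical tie rule: mean-RMSE values in the same bin of width
    tie_tol are treated as tied; ties go to the lower worst-case ROI-RMSE.
    """
    def sort_key(name):
        mean_rmse, worst_roi = results[name]
        return (math.floor(mean_rmse / tie_tol), worst_roi)
    return sorted(results, key=sort_key)

results = {
    "A": (0.0101, 0.05),
    "B": (0.0102, 0.03),
    "C": (0.0205, 0.01),
}
print(rank_teams(results, tie_tol=0.001))  # ['B', 'A', 'C']
```

Binning keeps the sort key a strict total order, which a pairwise "within tie_tol" comparison would not, but two close scores on opposite sides of a bin edge are not treated as tied.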

Results, prizes and publication plan

At the conclusion of the challenge, the following information will be provided to each participant:

- The evaluation results for the submitted cases

- The overall ranking among the participants

The top two participants:

- Will present their algorithm and results at the annual AAPM meeting

- Will receive complimentary registration to the AAPM meeting

- Will receive a Certificate of Merit from AAPM

A manuscript summarizing the challenge results will be submitted for publication following completion of the challenge.

Terms and Conditions

The DL-sparse-view CT challenge is organized in the spirit of cooperative scientific progress. The following rules apply to those who register a team and download the data:

- Anonymous participation is not allowed.

- Entry by commercial entities is permitted, but should be disclosed.

- Once participants submit their outputs to the DL-sparse-view CT challenge organizers, they will be considered fully vested in the challenge, so that their performance results will become part of any presentations, publications, or subsequent analyses derived from the Challenge at the discretion of the organizers.

- The downloaded datasets, or any data derived from these datasets, may not be given or redistributed under any circumstances to persons not belonging to the registered team.

- The full data, including reference data associated with the test set cases, is expected to be made publicly available after the publication of the DL-sparse-view CT Challenge. Until the official public release of the data, data downloaded from this site may only be used for the purpose of preparing an entry to be submitted for the DL-sparse-view CT challenge. The data may not be used for other purposes in scientific studies and may not be used to train or develop other algorithms, including but not limited to algorithms used in commercial products.

Organizers and Major Contributors

- Emil Sidky (Lead organizer) (The University of Chicago)

- Xiaochuan Pan (The University of Chicago)

- Jovan Brankov (Illinois Institute of Technology)

- Iris Lorente (Illinois Institute of Technology)

- Samuel Armato and the AAPM Working Group on Grand Challenges

Contact

For further information, please contact the lead organizer, Emil Sidky.